I’m simultaneously a behavioral researcher, an ethicist, and a hopeless Facebook addict, so I’ve been thinking a lot about last week’s controversial study (Kramer et al., PNAS 2014) in which researchers manipulated the emotional content of 689,003 Facebook users’ News Feeds. In summary, users who saw fewer of their friends’ posts expressing negative emotions went on to express more positive and fewer negative emotions in their own posts, while users who saw fewer posts expressing positive emotions went on to express more negative and fewer positive emotions in their posts.

This provides evidence for “emotional contagion” through online social networks: the idea that we feel better when exposed to other people’s positive emotions, and worse when exposed to their negative emotions. This finding isn’t obvious, since some have suggested that seeing other people’s positive posts might make us feel worse if our own lives seem duller or sadder in comparison.

The journal and authors clearly did not anticipate a wave of online criticism condemning the study as unethical. (Full disclosure: one of the authors is also a researcher at UCSF, though I don’t think I’ve ever met her.) In particular, critics took issue with the authors’ claim that participants agreed to Facebook’s Data Use Policy when they created their Facebook accounts, and that this constituted informed consent to research. Looking at the Common Rule governing research on human subjects, it’s clear that the requirements of informed consent (including a description of the purposes of the research, expected duration, risks and benefits, compensation for harms…) are not met just because subjects click “Agree” on blanket terms of use like these. There are exceptions to these requirements, and some people have suggested that the research could have qualified for a waiver of informed consent if the researchers had applied for one. I’m not so sure about that argument, and in any case the researchers hadn’t applied.

While the online discussion about this study has been fascinating, there are a few points that I think haven’t received the attention they deserve:

1. This controversy is taking place against a backdrop of more critical scrutiny of informed consent among ethicists. While bioethicists in general have traditionally advocated strong informed consent requirements in the name of participant protection and self-determination, in recent years a countervailing trend has emphasized that such requirements are burdensome and may inhibit important research. Last year we saw a controversy over a neonatal ICU clinical trial, in which many experts criticized regulators’ strict interpretations of informed consent. More recently, some prominent figures have suggested that informed consent requirements in medical research should be relaxed, particularly for “big data” studies made possible by electronic medical records and coordinated health systems. As with the Facebook study, these researchers and ethicists believe that new technologies hold promise for new ways of conducting research to promote health, and worry that these possibilities may be closed off by our existing ethical frameworks.

2. What’s “research”? One irony of this controversy is that Facebook manipulates users’ News Feeds all the time: they don’t show you everything that your friends post, but use a filter that they’re always tweaking “in the interest of showing viewers the content they will find most relevant and engaging.” (In other words: so that you’ll keep coming back and they can show you ads.) These tech firms take a relentlessly empirical approach to everything. Google once ran 41 experiments to figure out which shade of blue made users more likely to click on ads.

These activities aren’t categorized as research falling under the Common Rule, as typically construed, because they’re not designed to develop or contribute to generalizable knowledge. So if Facebook had studied how to manipulate users’ emotions by adjusting the News Feed filter for purely internal purposes, this controversy wouldn’t have arisen. What opens them up to ethical criticism here is that they tried to answer a more general scientific question and published their findings in PNAS.

As others have pointed out, this division creates perverse incentives. Experiments on Facebook users to improve Facebook’s own processes and make more money don’t receive this level of scrutiny, but experiments that answer general questions and are published in scientific journals do. Yet the risks to subjects are probably greater in the first case, since Facebook will keep those studies and findings proprietary, and the social benefits are smaller.

3. Social media and technology are creeping us out. I agree with the critics of the study that it didn’t meet existing requirements for informed consent in research; however, I don’t think that’s why people have responded to the story as they have. As is so often the case, “the scandal isn’t what’s illegal, but what’s legal.” In other words, what many of us really want to know is: what other experiments are Facebook/Google/Apple/Amazon/Samsung running on me that they’re not required to tell me about?

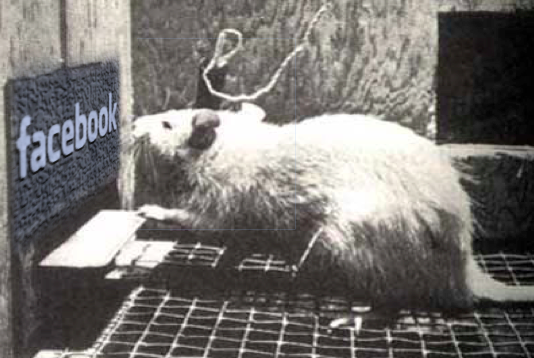

Like many people, I have complicated and ambivalent feelings about my dependence on these technology giants. As a researcher, I think these feelings are a defining feature of modern life and would be a worthy topic for further empirical study. In a sense, the broad outcry over this study illustrates why such research is so important, though I worry it will have the unintended result of making future academic-industry collaborations in this space less likely.

by: Winston Chiong